Adam Rida

AI researcher and Data Scientist

ex PhD Candidate in ML at Sorbonne University and AXA | Master's degree in Applied Mathematics from CY Tech

adamrida.ra@gmail.com [download CV] [connect on linkedin]

My name is Adam, and I am a data scientist and AI researcher based in Paris, France, with a strong passion for leveraging artificial intelligence to solve complex, real-world problems.

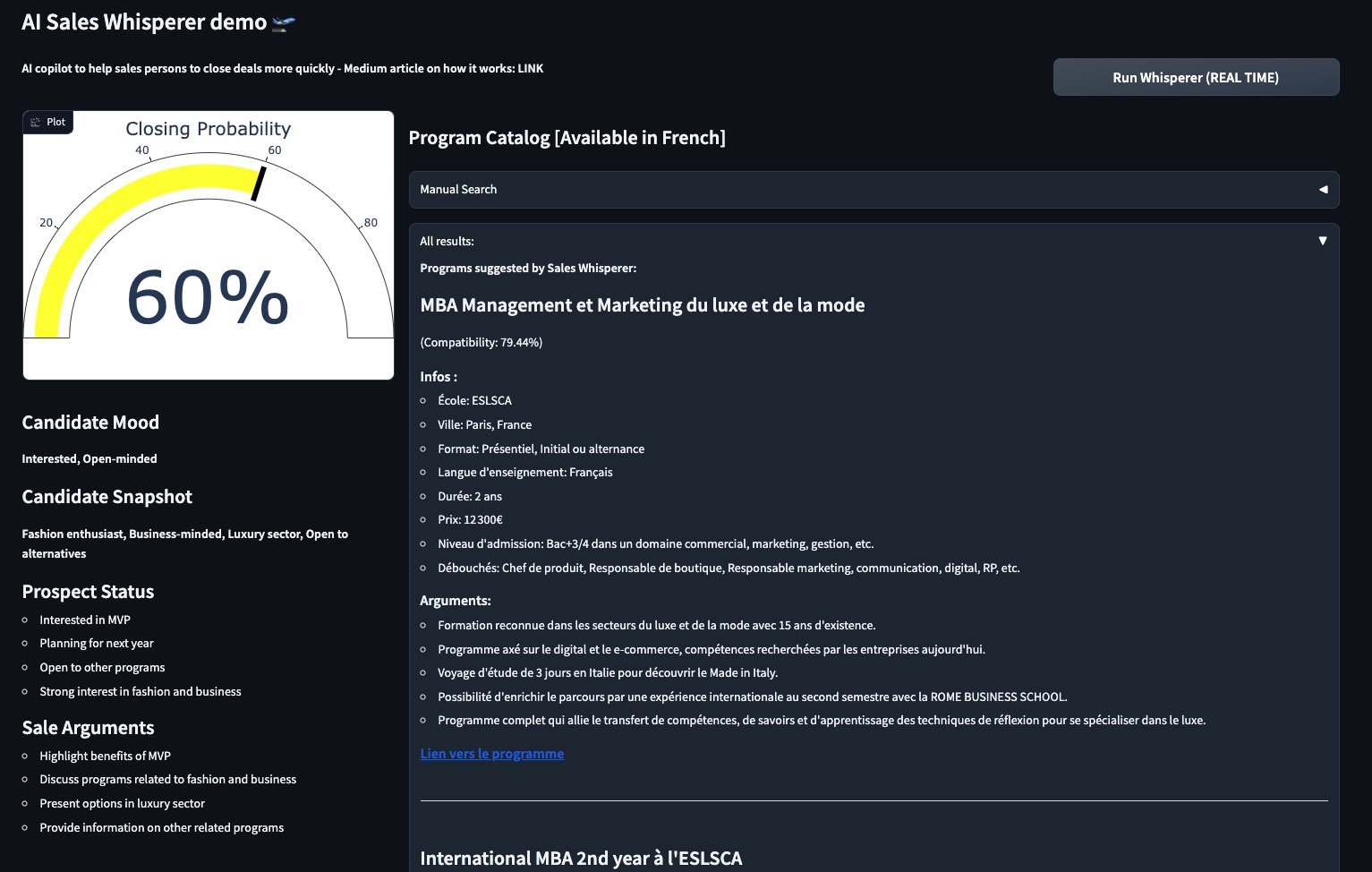

I've worked across various companies including Société Générale, AXA, Qantev, Rebellion Research, and now Autoplay AI. My expertise spans applied machine learning, explainable AI, unsupervised learning, and complex systems modeling.

I previously spent a year as a PhD candidate at Sorbonne University within the Trustworthy and Responsible AI Lab (TRAIL), a collaboration between Sorbonne and AXA. There, I worked on novel methods for explainable AI (XAI) and interpretability, specifically focusing on understanding model dynamics and concept drift. My research led to a publication at the DynXAI workshop of ECML-PKDD 2023.

Driven by the desire to build impactful solutions, I joined Entrepreneur First to co-found a startup aimed at helping private equity deal teams screen deals faster. Using advanced RAG techniques (GraphRAG, Graph Neural Networks), I developed a proprietary document parsing tool capable of extracting actionable insights from more than 3,500 pages of documents. Within two months, I built three live prototypes that were showcased to 100+ private equity funds.

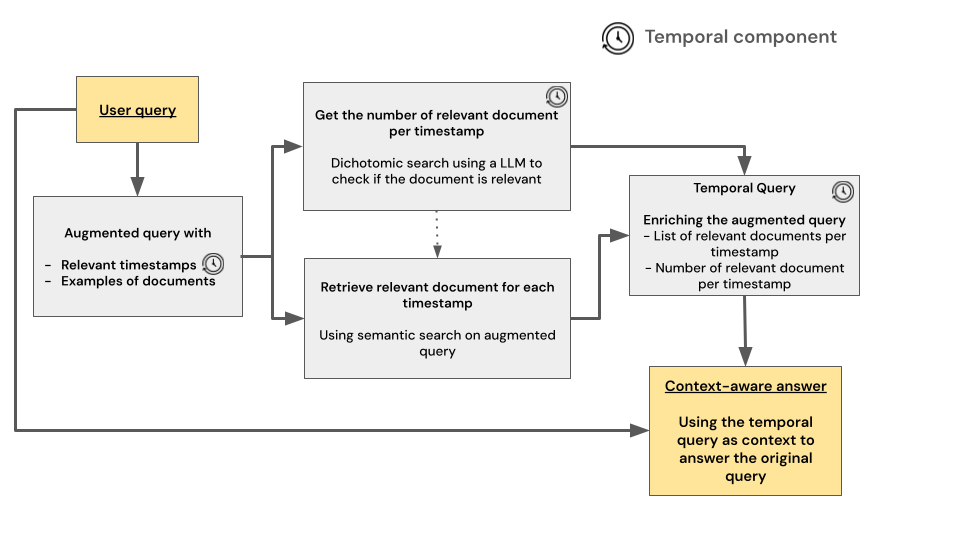

Currently, I am the Head of AI at Autoplay AI, where I designed and built a comprehensive AI inference and processing pipeline from scratch to understand user behavior. This involved analyzing events, mouse activity, and various interactions to infer hesitation, intention, knowledge gaps, and more. I also developed sophisticated evaluations and unsupervised learning models to detect user cohorts struggling with product adoption and created augmented search capabilities across sessions to uncover hidden insights.

I hold an Engineering Degree (Master's) from CY Tech in France, and previously completed the rigorous Ecole 42 coding program, where I refined my programming skills through intensive, project-based learning.

Beyond AI and data science, I'm passionate about aviation and am actively working towards obtaining my Private Pilot License (PPL) in France.

Areas of Interest:

- Explainable AI (XAI), Concept Drift, and AI-Human Interaction

- Outlier Detection & Unsupervised Learning

- Deep Learning & Latent Representations

- LLMs & Their Application to Advanced NLP Tasks (RAG, Document Parsing, Knowledge Representation)

- Transdisciplinary Approaches to High-Impact Problems

Publications and Blogs

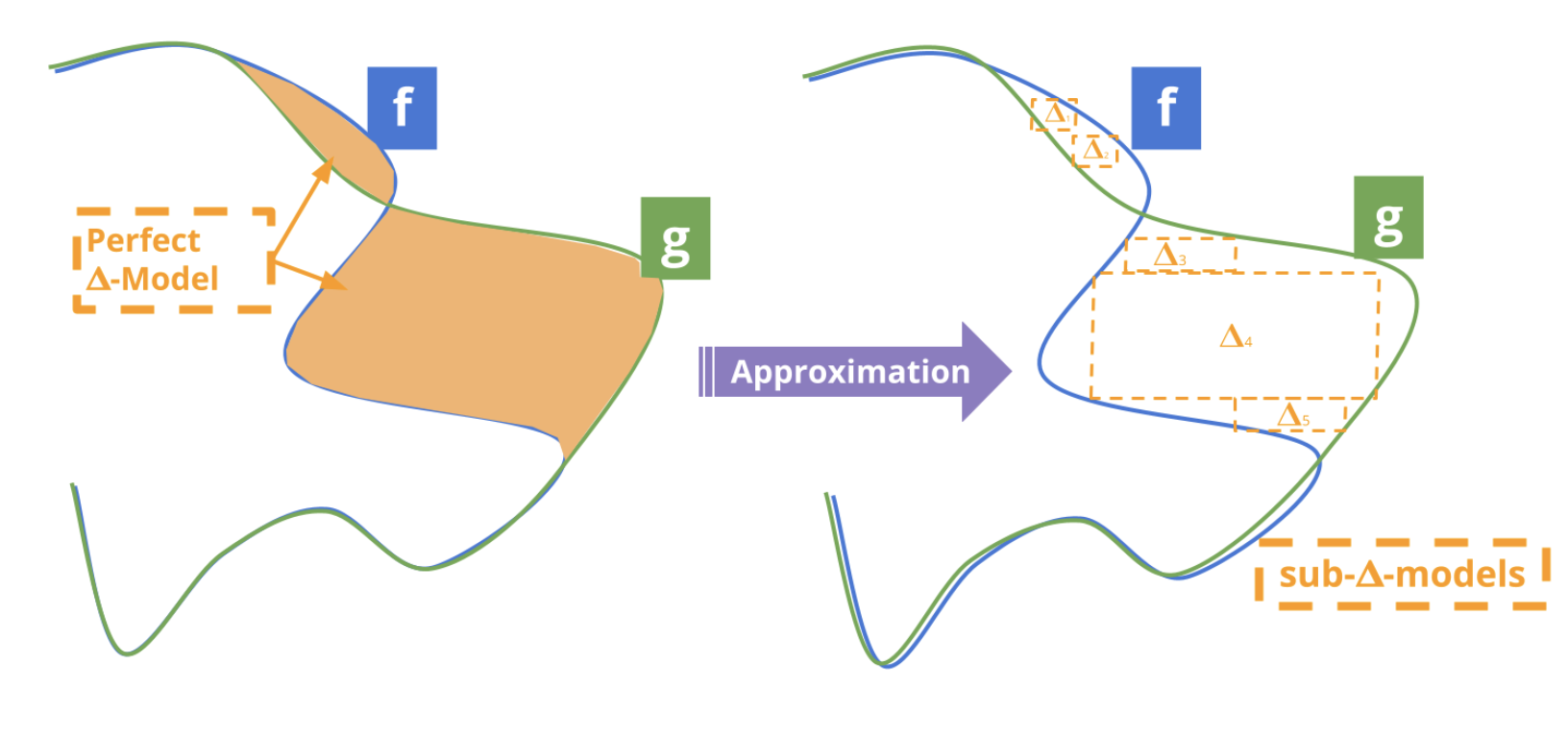

TL;DR: The DeltaXplainer paper introduces a method to explain differences between machine learning models in an understandable way. It uses interpretable surrogates to identify where models disagree in predictions. While effective for simple changes, it has limits in capturing complex differences. The paper explores its methodology, limitations, and potential for improving understanding in model comparisons.
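To make the idea in the TL;DR concrete, here is a minimal toy sketch of explaining model differences with an interpretable surrogate. It is not the paper's implementation: `model_a`, `model_b`, and `fit_interval_rule` are hypothetical names invented for this illustration, the data is a one-dimensional grid, and a single interval rule stands in for whatever surrogate the paper actually uses.

```python
def model_a(x):
    # Hypothetical "old" model: predicts 1 when the feature exceeds 0.5.
    return int(x > 0.5)


def model_b(x):
    # Hypothetical "new" model: same rule with a shifted threshold.
    return int(x > 0.6)


def fit_interval_rule(xs, labels):
    """Search for the interval [lo, hi] that best matches the binary labels.

    This brute-force search is an assumed stand-in for an interpretable
    surrogate; it returns the best interval and its fidelity (the share
    of points on which the rule reproduces the labels).
    """
    values = sorted(set(xs))
    best_correct, best_rule = -1, None
    for i, lo in enumerate(values):
        for hi in values[i:]:
            correct = sum(int(lo <= x <= hi) == y for x, y in zip(xs, labels))
            if correct > best_correct:
                best_correct, best_rule = correct, (lo, hi)
    return best_rule, best_correct / len(xs)


# Evaluate both models on a grid and label each point by whether they disagree.
xs = [i / 100 for i in range(101)]
disagree = [int(model_a(x) != model_b(x)) for x in xs]

# Fit the surrogate on the disagreement labels, not on either model's output.
rule, fidelity = fit_interval_rule(xs, disagree)
print(f"Models disagree when {rule[0]} <= x <= {rule[1]} (fidelity {fidelity:.2f})")
```

On this toy grid the recovered rule is the band between the two thresholds, which is the kind of simple change such a surrogate captures well. A difference spread across many features or regions would not fit in one short rule, which mirrors the limitation noted in the TL;DR.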

[arxiv] [github] [python package] [blog post]

[ssrn] [github]

[blog post]

[blog post]

Open Source Projects